Since the creation of NASA in 1958, American spacecraft designers have all faced the same dilemma: should they add more automatic controls to improve reliability, or more human controls to keep astronauts in the loop?

Across Mercury, Gemini and Apollo, NASA engineers overcame this conundrum by creating interfaces that combine the computing power of machines with the flexibility of astronauts. The evolution of these collaborative devices has much to teach us about how to team up humans and machines.

Here is a historical overview of the NASA on-board guidance systems that accomplished their missions by playing on the complementarity between humans and machines.

The automatic controls of Project Mercury

Following the launch of the Sputnik 1 satellite in 1957, and in the middle of the Cold War space race, NASA, created the following year, was given a mission of great importance: to put an American into orbit for the first time.

Around the same time, the X-15 program was proving the skill of American pilots at high-altitude suborbital flight. Yet these accomplishments were not enough to dispel the doubts of NASA leaders such as Wernher von Braun. This German-born engineer, a specialist in guided missiles, was convinced that automatic guidance systems were more precise and reliable than astronauts for orbital flight.

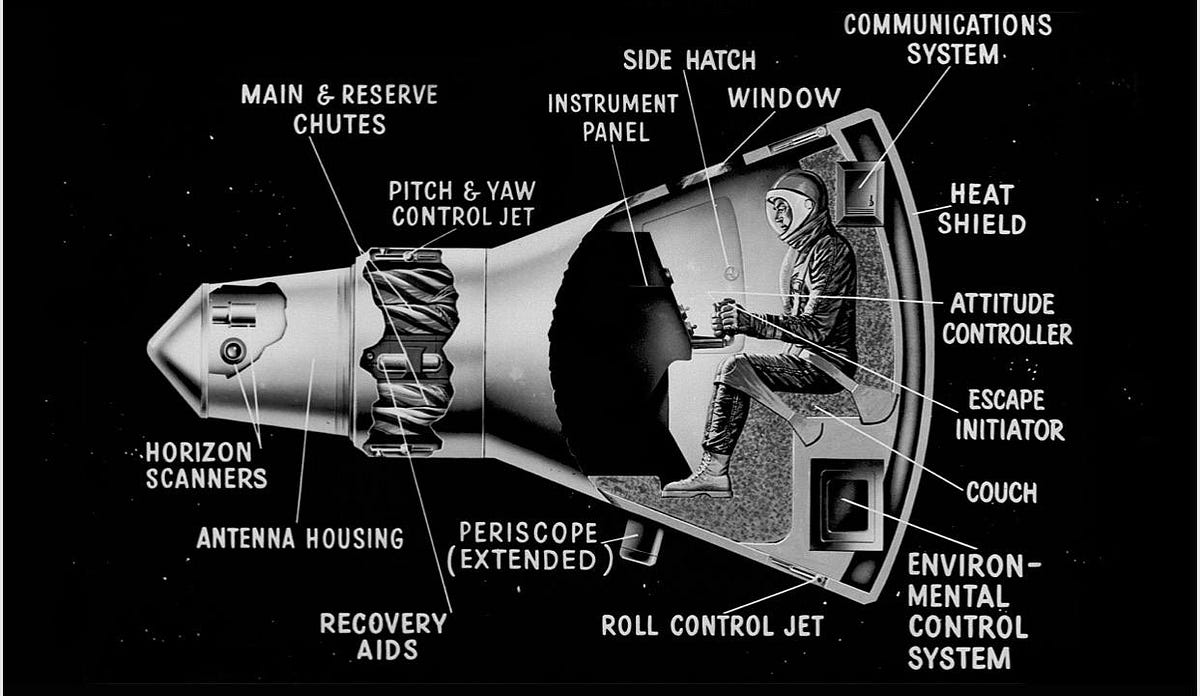

As a result, engineers built automatic controls into the operation of the Project Mercury spacecraft from the outset. The guidance system transmitted data to the ground, where computers handled the calculations and sent the appropriate commands back to the spacecraft. In this way, the system could manage the capsule’s position and trajectory, as well as conduct launch and re-entry operations.

However, the Mercury spacecraft also had two redundant control systems. During re-entry, the astronauts could use manual controls to change the capsule’s attitude or fire the retrorockets in case of a failure. Engineers also gave Mercury a more flexible control mode: by mixing automatic and manual control, this guidance mode helped damp the capsule’s oscillations in flight. It worked as a “fly-by-wire” mode, in which pilots could switch the automatic guidance on or off at will, and could also steer the vehicle through electronically regulated feedback.

Although automatic controls dominated Project Mercury, the interface nevertheless kept human controls, and astronauts relied on them most notably during the last flight, when Gordon Cooper successfully re-entered using the fly-by-wire controls.

This success steered NASA toward new ways of combining human and machine capabilities.

Gemini and the triumph of fly-by-wire controls

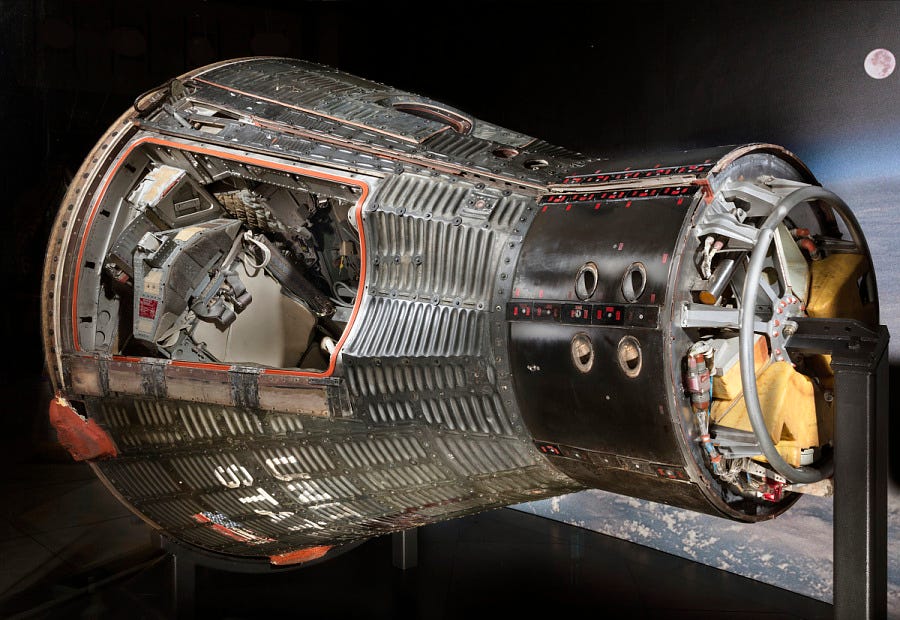

Following Mercury, NASA launched Project Gemini to carry out spaceflight experiments and to prepare for the ambitious Apollo project, whose goal was to land humans on the Moon.

Given the success of human controls during Mercury, engineers decided to give them a much larger role in the Gemini capsule. Unlike Mercury, Gemini let the astronauts take command at any time, whether to abort an operation or to choose the timing and position of a maneuver. The pilots also handled position control throughout the flight: guiding the vehicle during the descent back to Earth, managing rendezvous operations in space, and even maneuvering manually during re-entry.

These last maneuvers, however, proved harder for the astronauts. Re-entries often ended kilometers away from the planned landing point, and rendezvous dynamics required counter-intuitive responses from the pilots (for example, slowing down to change orbit and catch up with a vehicle ahead).
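Why slowing down helps a chaser catch up can be seen from two standard two-body relations, the vis-viva equation and Kepler’s third law. This is a simplified sketch that ignores drag and other perturbations; here r is the distance from Earth’s center, a the orbit’s semi-major axis, v the speed, T the orbital period and μ Earth’s gravitational parameter.

```latex
v^2 = \mu\left(\frac{2}{r} - \frac{1}{a}\right), \qquad
\frac{1}{a} = \frac{2}{r} - \frac{v^2}{\mu}, \qquad
T = 2\pi\sqrt{\frac{a^3}{\mu}}
```

A retrograde burn lowers v at the burn point, so 1/a grows and a shrinks. A smaller a means a shorter period T, so the chaser completes each orbit faster than the target ahead and gains on it, before raising its orbit again to match.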

To help with these maneuvers, Gemini’s engineers built the first on-board digital computer into the vehicle. Using flight data, it computed the commands the pilots needed to reach their rendezvous target and to re-enter successfully. With this assistance, the crews completed their Gemini missions and even resolved perilous situations, as during Gemini XII, when Buzz Aldrin achieved rendezvous without the help of the radar.

These experiments therefore paved the way for Apollo’s digital human-machine interface.

Building Apollo’s man-machine collaborative interface

Announced in 1961, the Apollo project flew after Gemini with an even greater ambition, set out in President Kennedy’s speech: to put men into orbit around the Moon, then land them on its surface.

Such a challenge raised many questions: how could the spacecraft be kept on the right trajectory during such a long journey? And how could the delicate lunar landing be achieved?

The answer from NASA engineers, soon joined by MIT researchers, was to develop a digital guidance computer that calculated and controlled the spacecraft’s position and trajectory in real time. Besides saving space and fuel, this computing power made it possible to automate many complex operations.

However, the astronauts were not sidelined. This automated guidance system raised the question of redundancy for the software as well as the hardware: the programs written by MIT’s developers could run into many technical problems.

In this respect, the pilots proved to be the ultimate safeguard of the computer system. They supervised its operation, initiating and double-checking delicate operations and confirming changes of piloting mode. All these tasks went through one essential tool: the interface of the Apollo Guidance Computer, the “Display and Keyboard” (DSKY).

This unit had a digital display, indicator lights and a calculator-like keyboard. Commands were entered as two two-digit numbers, a Verb and a Noun: the Verb described the type of action to perform, and the Noun specified which data the action applied to.

With it, astronauts could check trajectory and time data, change navigation modes, or recalibrate an operation with a simple command. They just had to type the right command number to access the desired data or mode.
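To make the Verb/Noun scheme concrete, here is a minimal Python sketch that parses a DSKY-style key sequence (VERB, two digits, NOUN, two digits, ENTR) into a command and looks up what it means. The key names, the `parse_keys` and `Command` helpers and the tiny lookup tables are hypothetical, chosen for illustration: the entries reflect commonly cited AGC codes, but the real vocabulary was much larger, varied between software releases, and the actual AGC software worked nothing like this.

```python
from dataclasses import dataclass

# Illustrative subset of the Verb/Noun vocabulary (assumed for this sketch;
# the real lists were far longer and differed between software versions).
VERBS = {
    6: "display decimal",
    16: "monitor decimal",
    37: "change program",
}
NOUNS = {
    36: "time of AGC clock",
}


@dataclass(frozen=True)
class Command:
    verb: int
    noun: int

    def describe(self) -> str:
        """Translate the two numeric codes into a readable action."""
        action = VERBS.get(self.verb, f"unknown verb {self.verb:02d}")
        data = NOUNS.get(self.noun, f"unknown noun {self.noun:02d}")
        return f"V{self.verb:02d} N{self.noun:02d}: {action} -> {data}"


def parse_keys(keys: list[str]) -> Command:
    """Parse a key sequence such as ["VERB", "1", "6", "NOUN", "3", "6", "ENTR"]."""
    fields = {"VERB": "", "NOUN": ""}
    current = None  # which field the typed digits go into
    for key in keys:
        if key in fields:
            current = key
            fields[key] = ""  # pressing VERB or NOUN again restarts that field
        elif key == "ENTR":
            break
        elif key.isdigit() and current is not None:
            if len(fields[current]) == 2:
                raise ValueError(f"{current} takes exactly two digits")
            fields[current] += key
        else:
            raise ValueError(f"unexpected key {key!r}")
    else:
        # The loop ran out of keys without ever seeing ENTR.
        raise ValueError("sequence must end with ENTR")
    if len(fields["VERB"]) != 2 or len(fields["NOUN"]) != 2:
        raise ValueError("both VERB and NOUN need two digits")
    return Command(int(fields["VERB"]), int(fields["NOUN"]))
```

For example, `parse_keys(["VERB", "1", "6", "NOUN", "3", "6", "ENTR"]).describe()` returns `"V16 N36: monitor decimal -> time of AGC clock"`. Keeping the numeric codes separate from their meanings mirrors the trade-off described here: a handful of keystrokes is enough to reach any mode, but only for an operator who has memorized the tables.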

Although these controls were complex (they required memorizing a list of numeric codes), mastering this interface gave the astronauts full control of the Apollo spacecraft. Some astronauts, such as Jim Lovell, mastered it so well that they could chain commands in quick succession and adapt within moments.

This was the first true example of a collaborative interface between humans and machines in space.

The next step of spacecraft controls

What is the future of control tools in spacecraft? NASA and other space organizations have already included digital and touch-based user interfaces into their design.

They give astronauts the power to check, correct and modify the automatic operations of modern spacecraft with greater ease and readability.

But with the development of uncrewed vehicles, humans are also increasingly working far from the mission site. Apart from crewed lunar and Martian ambitions, space agencies increasingly favor remote interfaces, which allow environments to be explored by vehicles that move automatically.

Are these remote operators less heroic than the Apollo pilots? That is far from certain, since their operations demand constant planning and system monitoring.

In any case, human-machine interfaces, combining the computing power of computers with the flexibility of human control, still have a bright future ahead of them!