The mission of UX design has always been the same: creating an interface that helps users achieve their goals. But how do you create such an interface when users interact with intelligent, ever-evolving robots?

Whether it’s delivery robots or self-driving cars, robot designers will need to create affordances between the human and the machine to enable control and feedback. This means making it possible for users to learn in collaboration with their interface. This challenge is even more complex for users who don’t have the necessary training and are not used to the behavior of smart devices.

Here are some ways to design collaborative interfaces that deliver a positive and useful experience.

The Specifics of User-Interface Interaction

If there is one area where user interfaces have constantly evolved, it is aviation. In the 1950s, pilots still had to perform most operations manually, but over the years piloting systems have become increasingly automated. This triggered the first reflections on human-machine collaboration, and especially on how to set priorities between the pilot’s commands and the autopilot.

Faced with this question, aircraft manufacturers Boeing and Airbus came up with two different solutions. Airbus adopted a “hard” automation system that fully defines and controls delicate operations such as takeoff and landing, and whose limits the pilot cannot override. Boeing, by contrast, built a flexible system that always lets the pilot switch to manual commands and take control of operations if the situation requires it.
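To make the difference between the two philosophies concrete, here is a minimal sketch of command arbitration. It is not either manufacturer’s actual control logic: the `Command` type, the `MAX_PITCH_DEG` limit and both `arbitrate_*` functions are hypothetical names and values chosen for illustration only.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical envelope limit, chosen only for illustration.
MAX_PITCH_DEG = 15.0


@dataclass
class Command:
    pitch_deg: float  # requested pitch angle, in degrees


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def arbitrate_hard(pilot: Optional[Command], autopilot: Command) -> Command:
    """'Hard' automation: whoever issues the command, it is clamped to the limit."""
    requested = pilot if pilot is not None else autopilot
    return Command(clamp(requested.pitch_deg, -MAX_PITCH_DEG, MAX_PITCH_DEG))


def arbitrate_soft(pilot: Optional[Command], autopilot: Command,
                   manual_override: bool) -> Command:
    """Flexible automation: with manual override on, the pilot's command passes unchanged."""
    if manual_override and pilot is not None:
        return pilot
    return Command(clamp(autopilot.pitch_deg, -MAX_PITCH_DEG, MAX_PITCH_DEG))
```

In this sketch, a pilot request of 25 degrees is reduced to 15 degrees by `arbitrate_hard`, while `arbitrate_soft` with `manual_override=True` lets it through as requested. The design question is exactly who gets the last word when the human and the automation disagree.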

Which model of human-machine interaction has proven to be the safest and most effective? As it turned out, Boeing’s model has saved some crews from perilous situations. Because pilots can take over manual control, they can find ingenious ways to handle unexpected situations. For example, in 1985, the pilot of a China Airlines Boeing regained control of his aircraft after an engine failure: he deactivated the automated controls and manually stabilized the aircraft’s trajectory.

This story shows how important it is to leave room for collaboration between pilot and automation through a well-thought-out interface. The pilot must have an interface that provides the essential information without distracting him, while keeping him actively engaged. He needs to retain a minimum of control over his aircraft so that he stays focused and can intervene quickly.

But the question looks different for average users, who lack the knowledge and training that an expert on the system has.

Machine Interaction and Human Passersby

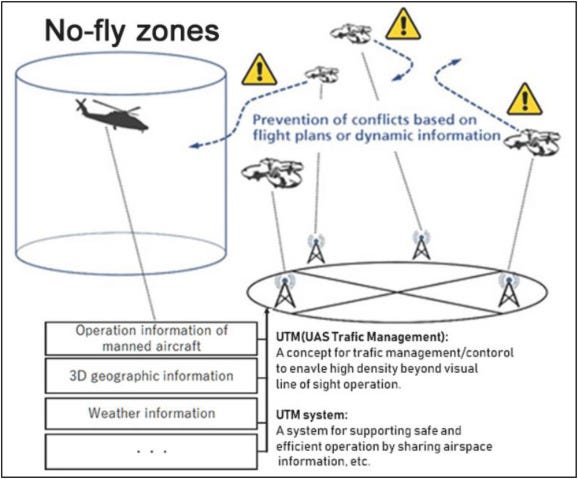

Various drones and bots already circulate in our cities to monitor high-risk buildings or detect vandalism. In San Francisco, delivery robots are allowed to travel on sidewalks in specific locations. Experts may be familiar with these devices, but what about passersby who might be inconvenienced or even injured by their behavior?

In July 2016 in Palo Alto, a child attracted by a security robot headed towards it. Although the robot tried to avoid him, the child moved in the same direction and the two collided. This accident, which fortunately did not result in any serious injury, shows how difficult it is for an intelligent system to predict human movements.

It also illustrates the challenge of ensuring the conditions for safe and smooth human-machine interaction. To meet it, designers can rely on behavioral science and its findings about human-human interaction.

For example, when two people pass each other in the street, they share a common mental model that allows them to predict each other’s movements. In the same way, designers must think about both the capabilities of the machines and the signals sent to the users.

Robots must learn to detect more varied signals from users, such as a shouted “Stop!” or signs of impatience or distraction. In turn, they need to emit signals that let users guess the robot’s intention. For example, a distinctive sound or an illuminated banner can help the robot communicate.
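This two-way exchange of signals can be sketched as a tiny decision loop: the robot reads a signal from the user, picks an intention, and announces it. Everything here is an assumption made for illustration, including the `STOP_WORDS` vocabulary, the `YIELD_DISTANCE_M` threshold and the banner messages. A real robot would rely on speech recognition and perception rather than plain strings and a single distance.

```python
from enum import Enum


class Intent(Enum):
    MOVING = "moving"
    YIELDING = "yielding"
    STOPPED = "stopped"


# Hypothetical vocabulary and threshold, for illustration only.
STOP_WORDS = {"stop", "halt", "wait"}
YIELD_DISTANCE_M = 2.0

# Hypothetical messages shown on the robot's illuminated banner.
BANNER = {
    Intent.MOVING: "Moving ahead",
    Intent.YIELDING: "After you",
    Intent.STOPPED: "Stopped",
}


def heard_stop(utterance: str) -> bool:
    """Return True if the utterance contains one of the stop words."""
    words = {w.strip("!?.,").lower() for w in utterance.split()}
    return not STOP_WORDS.isdisjoint(words)


def next_intent(utterance: str, person_distance_m: float) -> Intent:
    """Choose the robot's intention from what it heard and how close a person is."""
    if heard_stop(utterance):
        return Intent.STOPPED
    if person_distance_m < YIELD_DISTANCE_M:
        return Intent.YIELDING
    return Intent.MOVING


def announce(intent: Intent) -> str:
    """Return the banner text that tells passersby what the robot will do."""
    return BANNER[intent]
```

For instance, `announce(next_intent("Stop!", 5.0))` yields the "Stopped" message, which gives the passerby immediate feedback that the command was understood.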

But it is also about making the robot’s appearance and movement more predictable to the average user.

Robot Design and Affordances

This whole issue ultimately comes down to designing affordances for automated systems.

These affordances allow any user to take control of their interface. They once took the form of buttons on analog interfaces, then icons on digital ones. What form will they take in the case of an intelligent and dynamic system?

Whether the interface relies on touch, voice or gesture recognition, the important thing is to make its use as natural and accessible as possible for as many people as possible. For example, a robot that supervises operations in a hospital needs to react quickly to a nurse who is performing emergency care or who calls it to order.

One solution is to make such robots able to detect nurses’ warning signals. Another, more preventive solution is to give the robot an appearance and behaviors that remind users of human behavior. This lets users draw on mental models they already know, so they can predict the robot’s actions much better. Self-driving cars, for example, have already learned to make movements that are more natural and human-like, and that give confidence to pedestrians crossing the road.

These kinds of dynamic affordances will also be able to use motion and voice detection to adapt to users. For example, Amazon is already thinking about delivery drones that react to the gestures of passersby to anticipate their movements. But the easiest way is still to adapt the environment to what the robot can process.

Designing Intelligent Environments

Human-machine interactions are still very complex and unpredictable. So why not frame them in an environment that dictates the rules and limits of these interactions?

Just as our roads are governed by driving rules and our airspace is strictly delimited, robots need dedicated spaces in cities and buildings so they can orient themselves without disturbing users. Some designers have already created virtual fences that make a robot stop when it gets too close to humans, especially in factories. Amazon’s warehouses are also home to order-picking robots that navigate by following markers placed on the floor. These devices allow human workers to collaborate with the robots, increasing their productivity without risking their lives.
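A virtual fence of this kind can be approximated with a simple distance rule. The sketch below is only an illustration under stated assumptions: the `STOP_RADIUS_M` and `SLOW_RADIUS_M` values and the `speed_limit` function are hypothetical, positions are flat 2D coordinates in meters, and a real factory system would use certified safety sensors rather than a list of points.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical fence radii in meters, for illustration only.
STOP_RADIUS_M = 1.0   # inside this distance the robot must stop
SLOW_RADIUS_M = 3.0   # beyond this distance the robot may run at full speed


def speed_limit(robot: Point, humans: Sequence[Point],
                max_speed: float = 1.5) -> float:
    """Return the allowed speed (m/s) given the distance to the nearest human."""
    if not humans:
        return max_speed
    nearest = min(math.dist(robot, h) for h in humans)
    if nearest <= STOP_RADIUS_M:
        return 0.0
    if nearest >= SLOW_RADIUS_M:
        return max_speed
    # Between the two radii, scale the speed linearly with distance.
    fraction = (nearest - STOP_RADIUS_M) / (SLOW_RADIUS_M - STOP_RADIUS_M)
    return max_speed * fraction
```

With these values, a worker standing 2 meters away halves the robot’s allowed speed, and one standing within 1 meter brings it to a full stop. Slowing down gradually instead of stopping abruptly also makes the robot’s behavior easier for people nearby to anticipate.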

We can extend these ideas to the creation of dedicated sidewalks in cities for delivery robots, or highway lanes reserved for self-driving cars. Going further, we can imagine smart cities entirely dedicated to experimentation with human-machine interaction. Inhabitants would agree to live there and accept the risk of being around robots and drones all day long. They would provide crucial feedback to improve upcoming robot prototypes.

All these ideas would allow human-machine collaboration to flourish. And that’s how robotic intelligence and human dexterity combined will meet the challenges of tomorrow!